Joint Object-Material Category Segmentation from Audio-Visual Cues

Anurag Arnab, Michael Sapienza, Stuart Golodetz, Julien Valentin, Ondrej Miksik, Shahram Izadi and Philip H.S. Torr

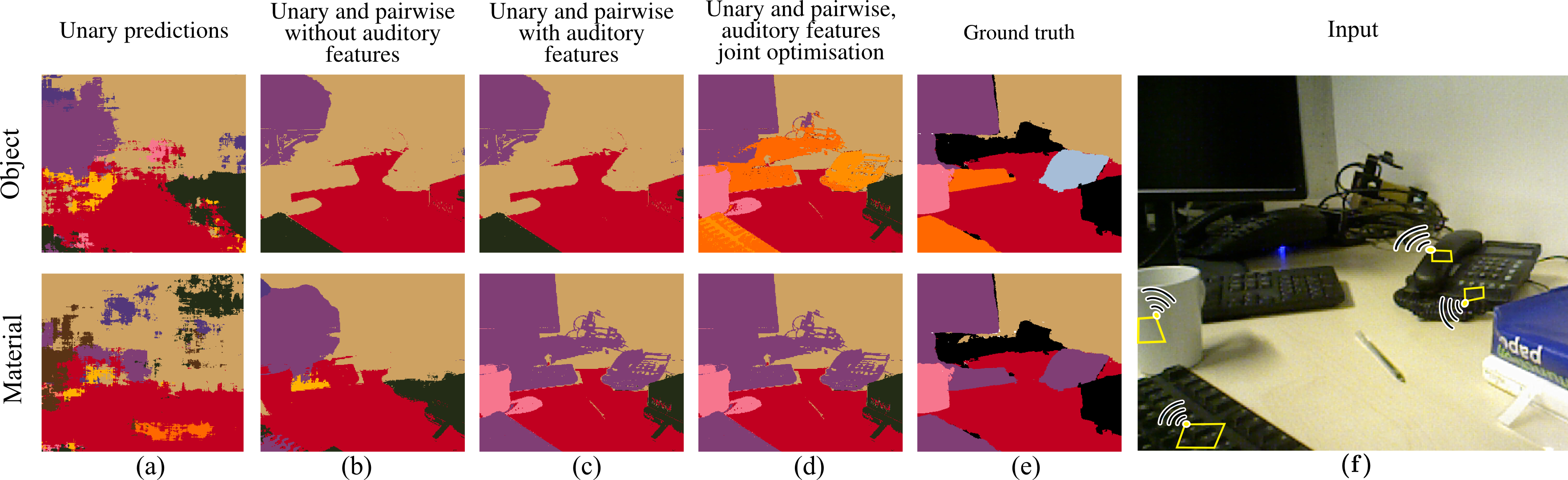

Abstract: It is not always possible to recognise objects and infer material properties for a scene from visual cues alone, since objects can look visually similar whilst being made of very different materials. In this paper, we therefore present an approach that augments the available dense visual cues with sparse auditory cues in order to estimate dense object and material labels. Since estimates of object class and material properties are mutually informative, we optimise our multi-output labelling jointly using a random-field framework. We evaluate our system on a new dataset with paired visual and auditory data that we make publicly available. We demonstrate that this joint estimation of object and material labels significantly outperforms the estimation of either category in isolation.

title={Joint Object-Material Category Segmentation from Audio-Visual Cues},
author={Anurag Arnab and Michael Sapienza and Stuart Golodetz and Julien Valentin and Ondrej Miksik and Shahram Izadi and Philip H. S. Torr},
year={2015},
booktitle={Proceedings of the British Machine Vision Conference (BMVC)},
doi={10.5244/C.29.40},
}